Edge computing is reshaping how modern applications respond to data by moving computation closer to where the data is produced. This shift enables real-time applications with lower latency, higher reliability, and stronger privacy. Latency reduction is a core benefit because data is processed near its source. Combined with edge AI, these capabilities enable smarter sensors, on-device inference, and autonomous decision-making at the edge. As IoT deployments grow, the approach supports modernization across industries through efficient, privacy-preserving data handling.

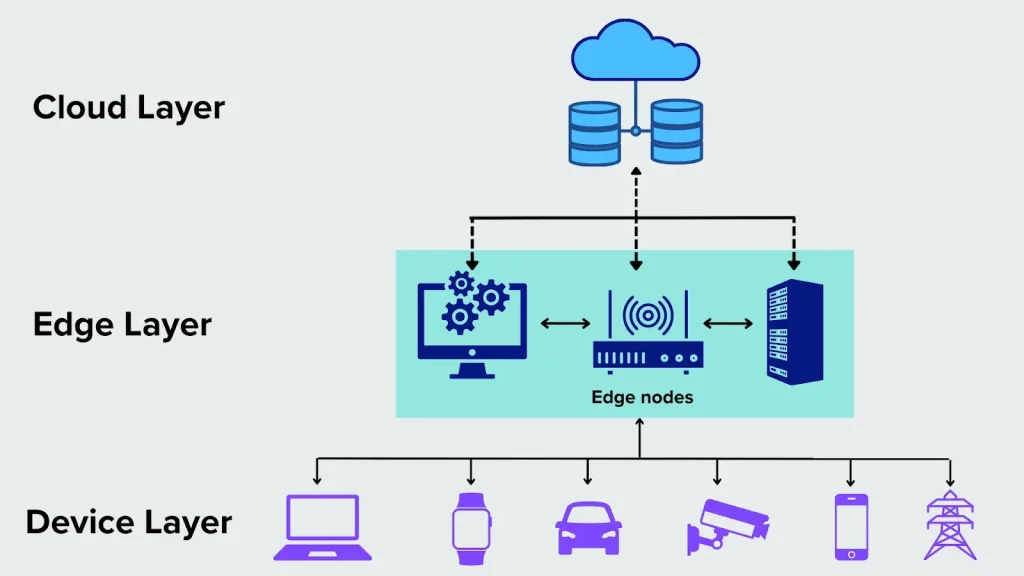

Think of edge computing as distributed processing at the network edge: compute, analytics, and AI move closer to the data source. In practice, teams deploy local computing on edge nodes, gateways, or fog layers to run analytics and trigger events without always contacting the cloud. This architecture reduces reliance on centralized data centers and stays resilient when connectivity is spotty. Across this cloud-to-edge continuum, orchestration and security practices govern the workload lifecycle, with a sharpened focus on latency, bandwidth, and privacy. Whatever the label, the trend is the same: moving intelligence to the edge to improve response times and privacy.

Edge Computing and Real-Time Applications: Reducing Latency at the Source

Edge computing brings compute and intelligence closer to data sources, enabling real-time applications to operate with low, predictable latency. By processing data at the edge (on sensors, cameras, or local servers), organizations achieve faster feedback loops, lower latency, improved reliability, and enhanced privacy. This proximity also helps IoT optimization: only the most valuable data travels to the cloud, which frees bandwidth for critical tasks.

A cloud-edge continuum design balances local decision-making with centralized analytics. In manufacturing, transportation, healthcare, and smart cities, edge computing shortens the path data must travel, which yields faster insights and better user experiences for real-time applications.

Latency Reduction as a Core Benefit: Edge AI and Real-Time Inference at Scale

Real-time applications demand near-instantaneous processing with deterministic latency. By performing inference and processing at the edge, organizations can cut cloud round-trips from tens or hundreds of milliseconds to single-digit milliseconds, enabling safer autonomous operations and more responsive control systems. Latency reduction is a tangible benefit that translates into smoother interactions and faster anomaly detection.
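
To make the round-trip argument concrete, here is a minimal sketch of a latency budget check. The `LatencyBudget` class and every millisecond figure below are illustrative assumptions, not measurements from a real deployment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LatencyBudget:
    """End-to-end latency components for one request, in milliseconds."""

    network_rtt_ms: float     # round trip between the device and the compute location
    inference_ms: float       # time spent running the model
    overhead_ms: float = 1.0  # serialization, queuing, etc. (assumed constant)

    def total_ms(self) -> float:
        return self.network_rtt_ms + self.inference_ms + self.overhead_ms

    def meets(self, deadline_ms: float) -> bool:
        return self.total_ms() <= deadline_ms


# Hypothetical figures chosen only to illustrate the comparison.
cloud = LatencyBudget(network_rtt_ms=80.0, inference_ms=5.0)
edge = LatencyBudget(network_rtt_ms=1.0, inference_ms=6.0)

deadline_ms = 10.0
print("cloud:", cloud.total_ms(), "ms, meets deadline:", cloud.meets(deadline_ms))
print("edge: ", edge.total_ms(), "ms, meets deadline:", edge.meets(deadline_ms))
```

With these assumed numbers the edge path stays inside a 10 ms deadline even though its model runs slightly slower, because the network round trip dominates the cloud path.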

Edge AI accelerates decisions by running optimized models on edge devices or lightweight servers. This on-device inference preserves privacy, reduces network dependency, and supports offline operation when connectivity is intermittent. In a common pattern, training occurs in the cloud while inference runs locally at the edge, which reinforces a real-time, resilient architecture.

IoT Optimization Across Industries with Edge Architectures

IoT optimization is a central driver of edge adoption. Industrial IoT networks generate vast streams of telemetry, video, and sensor data, and edge-enabled architectures can filter, summarize, and compress data at the source. This reduces bandwidth, lowers costs, and speeds time-to-insight for frontline operators in manufacturing, healthcare, agriculture, logistics, and beyond.
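
Filtering and summarizing at the source can be sketched as follows. The function name `summarize_window`, the thresholds, and the sample readings are hypothetical; a real pipeline would choose its own window size and summary fields.

```python
from statistics import mean
from typing import Iterable


def summarize_window(readings: Iterable[float], low: float, high: float) -> dict:
    """Reduce a window of raw sensor readings to one compact summary record.

    Readings outside [low, high] are treated as anomalies and kept verbatim;
    the rest of the window is represented only by count, min, max, and mean.
    """
    values = list(readings)
    if not values:
        return {"count": 0, "anomalies": []}
    anomalies = [v for v in values if v < low or v > high]
    return {
        "count": len(values),
        "min": min(values),
        "max": max(values),
        "mean": round(mean(values), 2),
        "anomalies": anomalies,
    }


# Hypothetical temperature readings with one out-of-range value.
window = [20.1, 20.3, 20.2, 35.7, 20.0]
summary = summarize_window(window, low=15.0, high=30.0)
print(summary)
```

Only the summary record would be sent upstream, so the payload size no longer grows with the sampling rate, while out-of-range values are still forwarded in full.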

With edge architectures, organizations ensure critical alerts and insights are generated locally. Edge devices monitor vitals or environmental conditions and trigger immediate actions, while cloud services retain long-term analytics. Across industries, IoT optimization at the edge supports scalable, compliant, and responsive operations.
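
The split between local alerting and cloud-side long-term analytics might look like the store-and-forward sketch below. `EdgeMonitor`, the threshold, and the buffer size are assumptions made for illustration.

```python
from collections import deque


class EdgeMonitor:
    """Raise alerts locally and buffer readings for later upload."""

    def __init__(self, threshold: float, buffer_size: int = 1000) -> None:
        self.threshold = threshold
        # Bounded buffer: the oldest readings are dropped if the link stays down too long.
        self.pending = deque(maxlen=buffer_size)
        self.alerts = []

    def ingest(self, reading: float) -> None:
        if reading > self.threshold:
            # Local decision: reacting does not require a cloud round trip.
            self.alerts.append(reading)
        self.pending.append(reading)

    def flush(self, cloud_available: bool) -> list:
        """Return the batch to upload and clear the buffer, but only if the link is up."""
        if not cloud_available:
            return []
        batch = list(self.pending)
        self.pending.clear()
        return batch


monitor = EdgeMonitor(threshold=100.0)
for reading in [72.0, 130.0, 88.0]:
    monitor.ingest(reading)

offline_batch = monitor.flush(cloud_available=False)  # link down: nothing is sent
online_batch = monitor.flush(cloud_available=True)    # link up: the backlog is sent
print(monitor.alerts, offline_batch, online_batch)
```

The alert fires at ingest time regardless of connectivity, while the full history reaches the cloud whenever the link returns.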

Architectural Patterns for the Edge and the Cloud–Edge Continuum

Architectural patterns for the edge span device edge, gateway or regional edge, and a cloud-edge continuum. Device edge handles sensing, filtering, and lightweight inference; gateway edge aggregates data and runs heavier analytics; and the cloud-edge continuum provides long-term storage, model training, and orchestration while preserving low-latency paths for real-time tasks.
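
One way to reason about these tiers is to place each workload on the most centralized tier that still meets its deadline. The sketch below assumes fixed round-trip times per tier and per-tier compute estimates; both are hypothetical, and the "prefer centralized" rule is a design choice rather than a requirement of the pattern.

```python
from enum import Enum


class Tier(Enum):
    DEVICE = "device edge"
    GATEWAY = "gateway or regional edge"
    CLOUD = "cloud"


# Assumed network round-trip time to reach each tier, in milliseconds.
ASSUMED_RTT_MS = {Tier.DEVICE: 0.0, Tier.GATEWAY: 5.0, Tier.CLOUD: 80.0}


def place_workload(deadline_ms: float, compute_ms: dict) -> Tier:
    """Return the most centralized tier whose round trip plus compute time fits the deadline.

    compute_ms maps each candidate tier to the estimated processing time on that tier.
    """
    for tier in (Tier.CLOUD, Tier.GATEWAY, Tier.DEVICE):
        if tier not in compute_ms:
            continue
        if ASSUMED_RTT_MS[tier] + compute_ms[tier] <= deadline_ms:
            return tier
    raise ValueError("no tier can meet the deadline")


# Hypothetical compute estimates: constrained hardware is slower per request.
estimates = {Tier.DEVICE: 4.0, Tier.GATEWAY: 3.0, Tier.CLOUD: 1.0}

batch_tier = place_workload(200.0, estimates)    # relaxed deadline
control_tier = place_workload(10.0, estimates)   # tight deadline
safety_tier = place_workload(5.0, estimates)     # very tight deadline
print(batch_tier, control_tier, safety_tier)
```

Under these assumptions, relaxed workloads land in the cloud, tighter ones on the gateway, and only the strictest deadlines force on-device processing.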

In distributed or multi-edge mesh deployments, sites collaborate to balance load, maintain redundancy, and provide local failover. These patterns enable reliable real-time apps across geographies, while maintaining coherence with centralized cloud workloads and governance policies.
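
Local failover in a multi-site deployment can be illustrated with a simple selection rule: route to the healthy site with the lowest latency. The `Site` record and the site names are hypothetical, and real meshes typically add load and capacity to this decision.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Site:
    name: str
    healthy: bool
    latency_ms: float


def pick_site(sites: List[Site]) -> Optional[Site]:
    """Return the healthy site with the lowest latency, or None if every site is down."""
    healthy = [site for site in sites if site.healthy]
    if not healthy:
        return None
    return min(healthy, key=lambda site: site.latency_ms)


sites = [
    Site("plant-east", healthy=True, latency_ms=4.0),
    Site("plant-west", healthy=True, latency_ms=9.0),
    Site("regional-hub", healthy=True, latency_ms=20.0),
]

primary = pick_site(sites)

# Simulate the nearest site failing: traffic moves to the next-closest healthy site.
sites[0].healthy = False
fallback = pick_site(sites)
print(primary.name, fallback.name)
```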

Containerization, Orchestration, and Edge DevOps for Scalable Real-Time Apps

Containerization and orchestration are foundational to scaling edge deployments. Edge Kubernetes distributions, lightweight containers, and edge-oriented CI/CD pipelines enable consistent deployment of microservices, AI inference services, and data processing across dispersed sites. This approach preserves cloud-native benefits while adapting to the constraints of edge hardware and intermittent networks, supporting real-time applications at scale.

Edge DevOps emphasizes observability, security, and rapid updates. By applying cloud-native practices at the edge, teams can push updates, roll back failures, and monitor performance across edge locations. This improves reliability and accelerates IoT optimization by ensuring the right workloads run where latency matters most and can be updated safely.
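
The push, monitor, and roll back cycle can be sketched as a staged rollout over an in-memory fleet. Everything here is a stand-in: `staged_rollout`, `deploy`, `is_healthy`, the site names, and the version strings are hypothetical and do not correspond to any particular orchestrator's API.

```python
from typing import Callable, Dict, List

# In-memory stand-in for the versions running at each edge site.
fleet: Dict[str, str] = {"site-a": "1.0", "site-b": "1.0", "site-c": "1.0"}


def deploy(site: str, version: str) -> str:
    """Install a version at a site and return the version it replaced."""
    previous = fleet[site]
    fleet[site] = version
    return previous


def is_healthy(site: str) -> bool:
    # Simulated health check: pretend version 2.0 fails on site-b only.
    return not (site == "site-b" and fleet[site] == "2.0")


def staged_rollout(
    sites: List[str],
    new_version: str,
    deploy_fn: Callable[[str, str], str],
    health_fn: Callable[[str], bool],
) -> bool:
    """Update sites one at a time; on the first failed check, restore every updated site."""
    replaced: Dict[str, str] = {}
    for site in sites:
        replaced[site] = deploy_fn(site, new_version)
        if not health_fn(site):
            for updated_site, old_version in replaced.items():
                deploy_fn(updated_site, old_version)
            return False
    return True


succeeded = staged_rollout(list(fleet), "2.0", deploy, is_healthy)
print(succeeded, fleet)
```

Updating one site at a time limits the blast radius: in this simulated run the failure at the second site stops the rollout before the third site is touched, and both updated sites return to the previous version.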

Security, Privacy, and Compliance in Edge-Enabled Real-Time Systems

Security, privacy, and compliance are foundational in edge-enabled real-time systems. Distributed data processing expands the threat surface, so security must be embedded in every layer—from device authentication and secure boot to encrypted data in transit and at rest. Privacy-preserving architectures lean toward edge processing to minimize data exposure, aligning with regulated industries such as healthcare and finance.
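
As one small piece of device authentication, a gateway can verify that a message really came from a known device and was not altered in transit. The sketch below uses HMAC-SHA256 from the Python standard library and assumes a pre-shared per-device key, which is a simplification; deployments often rely on certificates and mutual TLS instead. Note that this provides authenticity and integrity, not encryption.

```python
import hashlib
import hmac


def sign(key: bytes, payload: bytes) -> str:
    """Return a hex HMAC-SHA256 tag for the payload under the given key."""
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify(key: bytes, payload: bytes, signature: str) -> bool:
    """Check the tag using a constant-time comparison to avoid timing leaks."""
    expected = sign(key, payload)
    return hmac.compare_digest(expected, signature)


# Hypothetical key and message; a real key would come from secure provisioning.
device_key = b"example-pre-shared-key"
message = b'{"sensor": "temp-01", "value": 21.5}'
tag = sign(device_key, message)

accepted = verify(device_key, message, tag)
tampered = verify(device_key, b'{"sensor": "temp-01", "value": 99.9}', tag)
wrong_key = verify(b"some-other-key", message, tag)
print(accepted, tampered, wrong_key)
```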

A robust edge strategy includes IAM, secure supply chains, and ongoing monitoring across sites. Compliance requirements often favor keeping sensitive data local, but coordination with the cloud remains essential for governance and analytics. By planning governance and observability, organizations can realize secure, private, and scalable real-time experiences at the edge.

Frequently Asked Questions

What is edge computing and how does it support real-time applications?

Edge computing processes data near its source, reducing data travel time and enabling real-time applications with low, predictable latency. By performing compute and AI inference on devices, gateways, or regional edge servers, it keeps decisions local and improves reliability, even when network connectivity is intermittent.

How does latency reduction drive the adoption of edge computing for IoT optimization?

Latency reduction is a core benefit of edge computing. Moving processing closer to IoT devices allows data to be analyzed and acted on in single-digit milliseconds, avoiding cloud round-trips. This accelerates real-time IoT optimization across manufacturing, smart cities, and other connected environments.

What is edge AI and why is it pivotal for edge computing?

Edge AI means running AI inference at the edge rather than in the cloud. This enables fast, private, on-device decisions and reduces dependency on network connectivity, making edge computing ideal for predictive maintenance, security, and real-time analytics.

Which architectural patterns are commonly used in edge computing deployments?

Common patterns include device edge (sensors and microcontrollers), gateway or regional edge (edge servers coordinating data), cloud-edge continuum (long-term storage and training), and multi-edge mesh for distributed sites. Containerization and lightweight orchestration help manage workloads across the edge.

How does edge computing support IoT optimization across industries?

Edge computing supports IoT optimization by filtering, summarizing, and compressing data at the source, sending only valuable insights to the cloud. This reduces bandwidth, lowers costs, and speeds time-to-insight, enabling industries like healthcare, agriculture, logistics, and manufacturing to act on data in real time.

What security and governance best practices are essential for edge computing?

Security by design is essential for edge deployments: implement IAM, secure boot, encrypted data at rest and in transit, and continuous monitoring. A robust edge strategy also covers secure software supply chains, observability, and regulatory compliance for local data processing.

| Topic | Key Points | Examples / Notes |
|---|---|---|
| Definition | Processing at or near the data source to reduce latency and enable near real-time decisions | Near sensors, cameras, vehicles across industries; 5G, IoT drive expansion |
| Real-Time Applications | Requires near-instantaneous processing with deterministic latency | Manufacturing robotics, autonomous systems, smart cities analytics |
| Latency Reduction | Lower delays by moving compute closer to data sources; single-digit ms | Real-time AR/VR, anomaly detection in manufacturing |
| Edge AI | Inference runs on edge devices; privacy and resilience improve with local processing | Predictive maintenance, facial recognition in access control, robotics |
| IoT & Industry | Filter and summarize at source; reduce bandwidth; accelerate time-to-insight | IIoT telemetry, healthcare vitals locally, agriculture irrigation control |
| Architectural Patterns | Device edge, gateway/regional edge, cloud-edge continuum, multi-edge mesh | Low-latency real-time tasks; scalable distributed deployments |
| DevOps & Security | Containerization and orchestration enable scalable, repeatable deployments; security-by-design | Edge Kubernetes, CI/CD for edge workloads, secure boot, IAM |
| Implementation & Governance | Use-case mapping, network latency budgets, hardware selection, data governance, observability | Offline modes, reliability, data locality |
| Challenges | Operational complexity, intermittent connectivity, model updates, data consistency | Automation, robust update strategies, synchronization strategies |
| Future Outlook | Stronger cloud-edge integration, on-device AI, richer orchestration, industry accelerators | Cloud-edge continuum, scalable edge stacks |

Summary

Edge computing is reshaping how real-time applications are designed, deployed, and operated. By bringing compute closer to data sources, organizations can achieve meaningful latency reductions, unlock edge AI capabilities, and optimize IoT ecosystems across industries. As hardware, software, and network capabilities advance, edge computing is likely to become a foundational layer in modern, responsive, and secure real-time systems. For teams focused on performance and user experience, the question is not whether to adopt edge computing, but how to craft an edge strategy aligned with business goals, compliance, and the realities of distributed compute.